Agentic Coding Field Report: Building Fatebook MCP

What I observed in my most aggressive experiment with agentic coding tools yet.

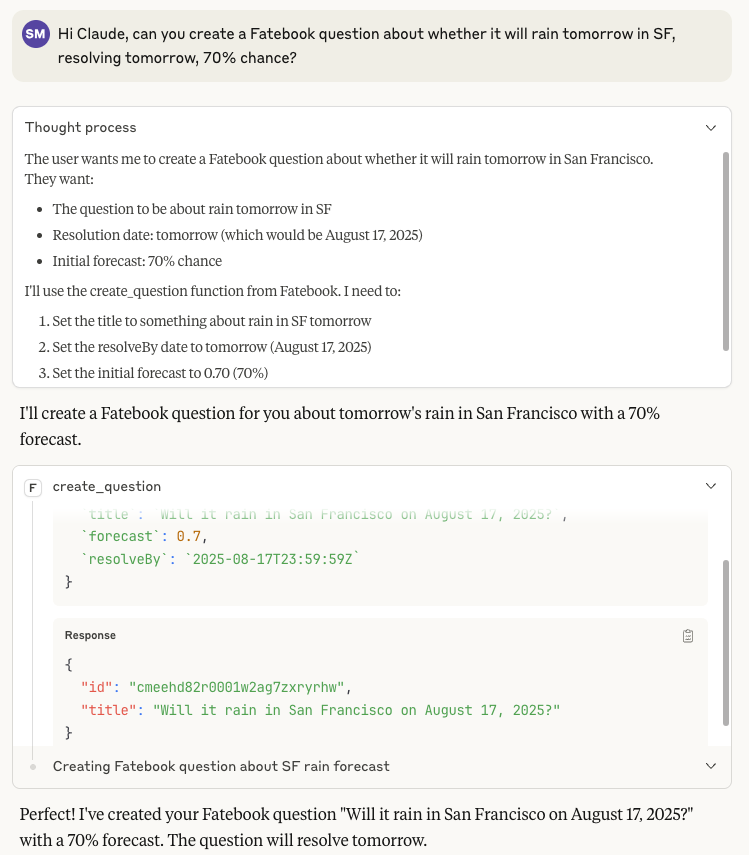

Like all the cool kids these days, I made an MCP server[1]. It gives AI tools like Claude and Claude Code the ability to query, create, and edit Fatebook predictions. For me, this is a big win because I like making predictions and scoring them, both of which I find LLMs to be increasingly valuable collaborators on.

But this post isn’t about why you should make predictions or the details of the MCP implementation. If you are one of the few people actually interested in using the server or understanding its technical details, the documentation is for you! Instead, the rest of this post contains some musings on my experience building this server. I’ll discuss why I built it, how I built it, and what I observed in doing so.

Why build the server

Besides the already mentioned reasons, I saw this as an opportunity to lean as heavily as possible on AI tools without having to worry about breaking something important or generating too much tech debt. In other words, I could dive head first into an agentic development workflow and see where it did and didn’t work.

How I built it

Tooling

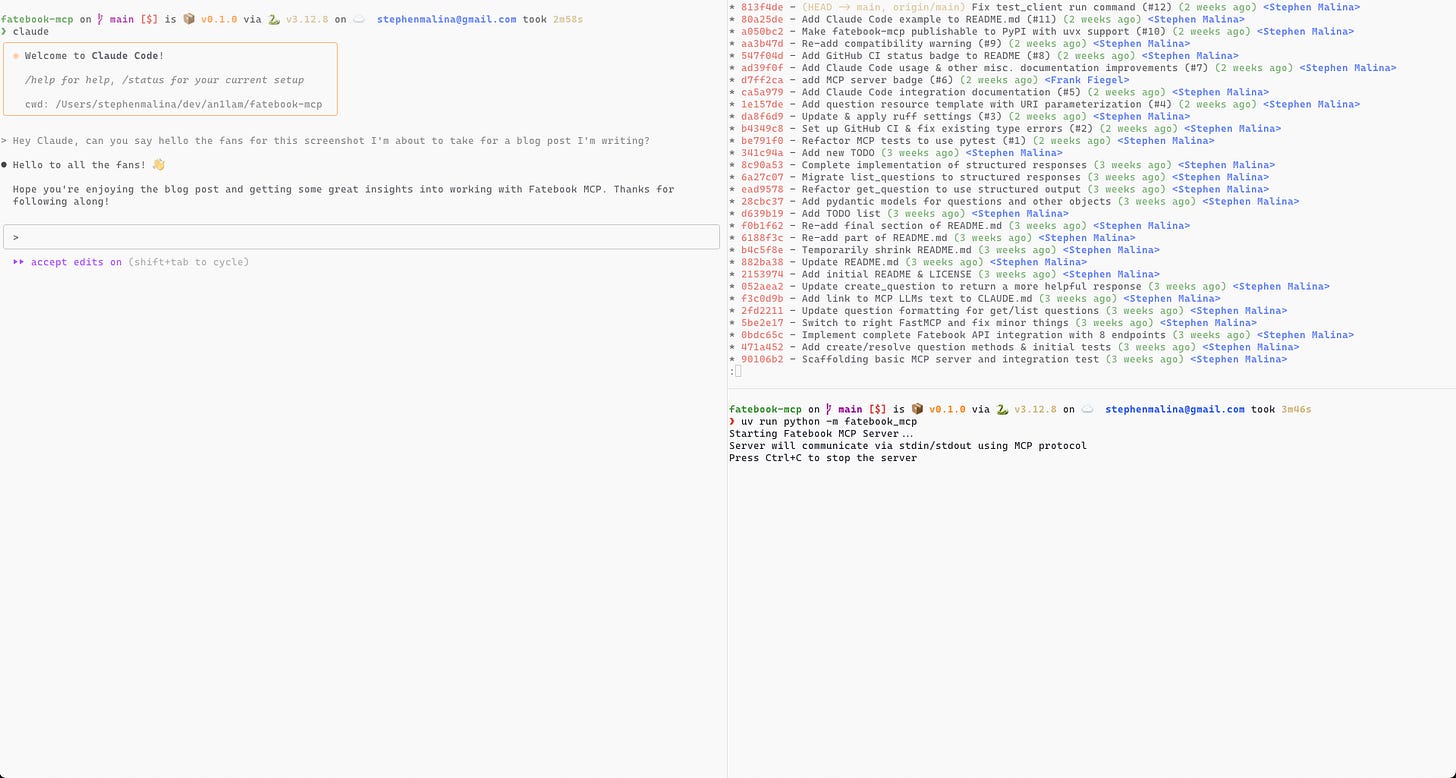

Besides the usual tools (Git, terminal, etc.), I relied most on Claude Code for writing and Zed for reading and editing. I chose Claude Code because it works well with my terminal-heavy workflow, I like Claude models, and it remains the most popular tool amongst developers I trust. On the first point, I can run it alongside other terminal panes for testing, Git operations, and miscellaneous tasks. This matters to me since I've spent years in (Neo)vim and Unix environments.

For most tasks, I'd have Claude Code in one pane, the server or tests running in another, and a third for Git and other operations. While some people run multiple Claude Code instances in parallel, I haven't mastered this yet. I find I still need to pay close attention to keep each instance on track.

Model-wise, I mostly used 'Plan Mode' (Opus 4 for planning, Sonnet 4 for implementation) once I discovered it, which helped preserve my Opus quota for the hardest conceptual work. My system prompts (user-level here, project-level here) are fairly minimal, mainly discouraging Claude's worst habits like emoji overuse and excessive try/except blocks. So far, I've found that clear task specification and good repo structure matter more than prompt engineering.

One unexpected tool choice: despite being comfortable in Neovim, I found myself gravitating toward Zed (with AI features turned off) for code review. Something about reviewing AI-generated code in my usual terminal setup created subtle friction — perhaps the aesthetics, or having tabs and a file browser readily available. Whatever the reason, making code review as frictionless as possible ended up being a big win when dealing with Claude's rapid-fire output.

Workflow

So that’s the setup I used. In terms of how I developed and iterated, my typical workflow was:

1. Figure out my next task by looking at my to-do list (a Markdown file). Depending on where I was, this would sometimes involve ad hoc design or research, for which I’d also treat Claude as my pairing partner. For example, after developing the initial tool-based implementation, I experimented with adding a predictions “resource”. Figuring out whether and how to do this involved a bunch of reading interleaved with discussion and debate with Claude.

2. Once I’d figured out what to work on, I’d write out as clear a task specification as I could and give it to Claude Code in “plan mode.” I generally tried to structure the work so that each plan encompassed one self-contained feature that could be packaged into a single pull request. Plan mode requires Claude to present a plan to the user (me) and get approval before switching into execution mode, so I’d often go back and forth with Claude a few times before approving its plan. Most often, my comments would look something like, “this overall looks good, but did you consider X” or “let’s axe this part for now as the rest feels like a lot already.”

3. From there, Claude would get to work and I’d pay close attention to what it was doing, often interrupting it or queueing up questions about its work. In an ideal world, I’d also be thinking about something relevant or actively reviewing its output in the editor. In practice, I’d sometimes get distracted while it worked and then come back upon being notified that it needed me. Once we’d iterated towards a reasonable initial implementation, I’d usually become more involved again as part of testing, reminding Claude to run automated tests and asking it to suggest manual tests that I could do myself.

4. Upon completing the implementation, I’d ask Claude to commit and push changes. Early on, I just developed on the main branch, but at some point I switched to having Claude create PRs, which I’d review.

5. Upon merging a PR, if I thought there might be something to learn from the back-and-forth, I’d ask Claude if it wanted to make any changes to its documentation based on this work. If it did, I’d let it do that. If not, I’d clear the context and move on to the next thing.

To a large extent, this is just the workflow I use when developing on my own or with collaborators, adapted to Claude being somewhere in between a tool and a collaborator. Overall, I’d say it works OK, with the biggest source of friction being intermediate and final review. (I’ll discuss this more at the end of the post.)

Observations

Now I’ll switch gears and make some observations about what Claude Code did and didn’t do well as part of this project. These observations come with all the caveats of this being a relatively small, personal project in an area (web development) that is likely relatively familiar to Claude. That said, all the observations here match things I’ve observed, even more anecdotally, while using models for development over several years (across various tools), so I expect most of them to generalize reasonably well to current models used in other settings[2].

A fun fact about this section: I waited long enough to write it that I became worried I didn’t actually remember where Claude did and didn’t do well. To deal with this, I had Claude help me process the raw transcripts of its work on Fatebook MCP (~20 sessions) into a readable format, and then read through them, taking notes on its successes and failures. I know it’s my own fault that I had to do this in the first place, but needless to say, it was not the most fun part of the development or writing process…

What Claude (Code) did well

In terms of overall performance on this project, I’d give Claude an 8 or 9 out of 10. It wrote the vast majority of the code in the repo, mostly without help from me, and without requiring any major refactors of the service itself. Here are some rough numbers to back this up:

- ~5/34 commits in the repo include meaningful amounts of code written by me.
- ~10/299 messages involved “serious” interventions[3].
- Claude sent 1,245 messages and I sent 523, giving a Claude-to-user ratio of 2.38:1.
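The ratio is just the quotient of per-role message totals. As a minimal sketch of how such a tally could be computed, assuming the transcripts have already been flattened into records with a hypothetical `role` field (this is not Claude Code’s actual transcript format, nor the script used to produce the numbers above):

```python
from collections import Counter


def role_counts(sessions):
    """Tally messages per role across sessions.

    Each session is a list of dicts with a hypothetical "role" key,
    e.g. "assistant" for Claude and "user" for me.
    """
    counts = Counter()
    for session in sessions:
        for message in session:
            counts[message["role"]] += 1
    return counts


def claude_to_user_ratio(counts):
    """Assistant messages per user message, rounded to two decimals."""
    return round(counts["assistant"] / counts["user"], 2)


# Plugging in the totals reported above: 1,245 / 523 ≈ 2.38.
totals = Counter({"assistant": 1245, "user": 523})
print(f"{claude_to_user_ratio(totals)}:1")  # 2.38:1
```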

As these numbers suggest, and unlike what I’d observed with previous model generations, Claude Code got stuck in spirals it couldn’t escape on its own only about 3 to 5 times, and in those cases I was able to help it out with relatively small nudges. While there’s definitely room for improvement, this high score reflects real progress. Claude handled everything from Python packaging intricacies to learning new APIs on the fly, often surprising me with its competence.

Claude’s encyclopedic but flexible knowledge of the Python ecosystem really impressed me. People often claim that these models shine at memorization but that their weight-level memories are rigid; I don’t share this impression. Having never published a package to the public PyPI server before, I relied heavily on Claude’s existing understanding of Python packaging and registration, augmented with its (in-context) reading of documentation, and found it to be an indispensable helper. While it would occasionally get subtle things like exact arguments to packaging commands wrong, it showed a strong understanding of how the Python packaging ecosystem works, which saved me a ton of time and angst in testing and eventually publishing Fatebook MCP.

This project was also a good opportunity to see how well Claude dealt with relatively recent tools and packages under lots of ongoing development. Fatebook MCP uses uv for package and environment management and is built on top of the mcp Python package. Both were first published in 2024 and have been under active development since then. This means that some version of their documentation likely appeared in the Claude 4 training corpus, whose reliable knowledge cutoff is January 2025 (source), but both have continued to evolve rapidly since then.

Claude effectively incorporated new information about these packages and tools as long as I provided it with links to their documentation. This sometimes included “browsing” by following additional, self-selected links. For example, the FastMCP server we used has been under substantial development throughout 2025, and Claude was able to work out how the MCP tool decorators had evolved in that time by reading the documentation and its code snippets. As with packaging, it did occasionally mix up command-line arguments, presumably relying on a stale understanding of those commands. This really highlighted how important good documentation, easy to find and navigate as text, is in the age of these tools.

Finally, Claude writes pretty solid code these days. Yes, it loves try/except too much, and yes, it seems to have somehow gotten it into its (GPU) head that people use emojis in logging statements way more than they do. But besides that, I rarely noticed obvious code smells, and the right system prompt does seem to help most of the time. It didn’t wow me with the strokes of elegance you might find in Raymond Hettinger’s code, but it makes up for this by being better than past models at sticking to the surrounding style, a huge component of being a good collaborator in any real codebase.

Where Claude (Code) did less well

Model(s)

If I were to summarize Claude’s (technically a mix of Sonnet and Opus 4, so models’) deficits in one sentence, I’d say that it still struggles with keeping a big-picture perspective and tracking important conceptual context. This manifests in various ways, which I’ll talk about shortly, but the end result is that Claude still requires enough intervention that my role feels less like “manager” and more like senior pairing partner.

Claude often needs to be reminded to review documentation, even if the link is in its instructions or somewhere in the codebase. For example, when we were setting up GitHub CI, Claude started building out a YAML file from scratch. I had a hunch that there was probably a page in the uv docs on the right way to do this, and suggested Claude check. Indeed there was, and it included some useful guidance. Now, it’s debatable whether checking preemptively was strictly necessary, but in this case, part of the issue was that Claude had links to the uv documentation in its instructions and so could easily have checked. This is part of a broader pattern: its sense of when to consult external sources seems to capture only a subset of the situations in which developers typically do so. Combined with the fact that Claude’s code is often reviewed less carefully than human-written code, this form of not-invented-here is especially risky in this context.

In my experience, Claude will also never spontaneously decide to do a major refactor. If asked for options, it will sometimes propose one, but it’s missing the impulse senior engineers have to notice when they’re creating a mess, take a step back, and ask if there’s a better way. In our work on Fatebook MCP, I saw this with the integration test. Claude’s initial implementation did all the setup as part of the first test method. This was fine when there was only one test method, but it quickly got out of control and became error-prone once the test started covering multiple multi-step scenarios. This was interesting because I noticed things starting to get out of control relatively early on and even added a to-do to clean up the test at some point. Despite this, while adding more and more scenarios, Claude never once said, “I think this is the point at which we really need to fix this.” Instead, I eventually decided enough was enough and proposed a concrete refactor of the tests to de-duplicate much of the setup.

I strongly suspect Claude lacks this impulse because it’s trained to complete tasks, not to spontaneously propose changes that improve the global coherence of, for example, a codebase in which a SWE-Bench task resides. Whatever the cause, the upshot is that responsibility for staying on top of a codebase’s health remains in the hands of the user. In my mind, this presents one of the biggest challenges for effective use of these tools, as the intuition for when and how to adjust a codebase often comes from active development rather than pure review. Unfortunately, I don’t have any great ideas for how to solve this besides finding ways to actively engage with the code being written, even if I’m not the one writing it.
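To make the integration-test refactor described above a bit more concrete, here is a minimal sketch using Python’s standard unittest module rather than whatever framework Fatebook MCP actually uses. `FakeFatebookClient` and its methods are hypothetical stand-ins, not Fatebook’s real API. The point is that shared setup moves out of the first test method into `setUp`, so each scenario starts from the same known state instead of depending on what earlier steps left behind.

```python
import unittest


class FakeFatebookClient:
    """Hypothetical in-memory stand-in for a real API client."""

    def __init__(self):
        self._questions = {}
        self._next_id = 1

    def create_question(self, title):
        question_id = self._next_id
        self._next_id += 1
        self._questions[question_id] = {"id": question_id, "title": title, "resolved": False}
        return question_id

    def resolve_question(self, question_id):
        self._questions[question_id]["resolved"] = True

    def list_questions(self, resolved=None):
        questions = list(self._questions.values())
        if resolved is not None:
            questions = [q for q in questions if q["resolved"] == resolved]
        return questions


class ListQuestionsScenarios(unittest.TestCase):
    def setUp(self):
        # Shared setup runs fresh before every scenario, instead of
        # living inside the first test method.
        self.client = FakeFatebookClient()
        self.open_id = self.client.create_question("Will it rain tomorrow?")
        self.closed_id = self.client.create_question("Will the PR merge this week?")
        self.client.resolve_question(self.closed_id)

    def test_lists_all_questions(self):
        ids = [q["id"] for q in self.client.list_questions()]
        self.assertEqual(ids, [self.open_id, self.closed_id])

    def test_filters_unresolved(self):
        ids = [q["id"] for q in self.client.list_questions(resolved=False)]
        self.assertEqual(ids, [self.open_id])

    def test_filters_resolved(self):
        ids = [q["id"] for q in self.client.list_questions(resolved=True)]
        self.assertEqual(ids, [self.closed_id])
```

Saved as a test module, this runs with `python -m unittest`. Adding a new scenario then means adding one short method rather than extending an ever-growing first test.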

Almost the entire discussion so far has focused on planning and writing code, but anyone who’s worked as a programmer knows that debugging often consumes as much time as writing code and is an equally important skill to cultivate. Claude’s debugging skills have improved a lot over the generations but remain lumpy. On one hand, Claude occasionally shows tactical brilliance in debugging, discovering the cause of an issue by reading an entire log and noticing the single line that points to what went wrong. On the other hand, Claude lacks the consistently systematic approach often required for debugging hard problems. One case during Fatebook MCP’s development really highlighted this.

The Fatebook MCP server includes a list_questions method, which (shocker) lists questions given a set of filters. When Claude initially implemented this method and started testing it, instead of returning a list of questions, the method would return only the first question. This was clearly a problem. I started out by asking Claude to try to figure out what could be going wrong. In this situation, assuming they didn’t immediately know the cause, a mature engineer would likely have approached it systematically. They’d recognize that by checking what was being returned at each step between the server’s endpoint handler and the client’s logging, they’d eventually narrow down where the list was getting converted to its first item. Claude… didn’t do this. Instead, it quickly locked on to a specific hypothesis about where list handling was going wrong and tried a bunch of different fixes. Since its hypothesis was wrong, this led it on a wild goose chase through various list return methods, none of which solved the problem. Observing this, I eventually took pity on both of us and prompted it to be more systematic, as described above. Before that process completed, I had a flash of insight, and my hunch about what was wrong turned out to be correct, but I’m confident the plodding approach would have led to the same conclusion.

This lack of debugging meta-skills means that, even more than for implementing, using Claude for serious debugging requires careful attention to and guidance for how to structure its process.
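The systematic approach described above amounts to checking an invariant (“this is still a list”) after every hop between the server’s handler and the client, and stopping at the first hop where it breaks. A minimal sketch of that idea, assuming a hypothetical four-stage pipeline; the stage names and the bug in `transport` are invented for illustration, since the actual cause isn’t described here:

```python
import json

# Hypothetical pipeline from server handler to client. The bug in
# `transport` is invented for illustration.


def handler(_request):
    """Endpoint handler: returns every matching question as a list."""
    return [{"id": 1, "title": "A"}, {"id": 2, "title": "B"}]


def serialize(result):
    """Encode the handler's result as JSON for the wire."""
    return json.dumps(result)


def transport(payload):
    """Buggy hop: silently unwraps a list to its first element."""
    decoded = json.loads(payload)
    return json.dumps(decoded[0] if isinstance(decoded, list) else decoded)


def client_parse(payload):
    """Decode the JSON the client receives."""
    return json.loads(payload)


STAGES = [
    ("handler", handler),
    ("serialize", serialize),
    ("transport", transport),
    ("client_parse", client_parse),
]


def as_python(value):
    """Decode JSON strings so every hop's output can be inspected the same way."""
    return json.loads(value) if isinstance(value, str) else value


def first_broken_stage(stages, request, invariant):
    """Run the stages in order and return the name of the first one whose
    output violates `invariant`, or None if every stage preserves it."""
    value = request
    for name, stage in stages:
        value = stage(value)
        if not invariant(as_python(value)):
            return name
    return None


def is_list(value):
    return isinstance(value, list)


print(first_broken_stage(STAGES, {"filters": {}}, is_list))  # transport
```

This is plodding by design: it never guesses at a fix, it just localizes the fault. For long pipelines, bisecting over the stages would reach the same answer in fewer checks.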

Last, I know it’s popular to say, but Claude still lacks some ineffable taste. This project wasn’t architecturally complex, so this manifested more in the small than in the large. I already mentioned the case of the ballooning integration test. Another case arose when I asked Claude to explore how we might strip detailed information out of list_questions responses. Claude liked the idea of overriding our Pydantic model’s model_dump method so that we wouldn’t return null fields. Like all questions of taste, whether this was a good solution is debatable, but I personally felt it was a cure worse than the disease. The alternative of just having a separate question model for list responses seemed both more standard and less likely to cause issues down the road. The point here is not that Claude was wrong in this case per se. Instead, the issue is that here and elsewhere, I’ve found that it relies too heavily on canned reasons for its decisions and is too willing to be persuaded to the other side if I push it. The combination of these factors means that, even in cases where it has good taste, it can only reliably act as a generator of ideas rather than an arbiter.
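The alternative I preferred, a separate model for list responses, can be sketched as follows. This uses standard-library dataclasses as a stand-in for Pydantic models, and the field names are hypothetical rather than Fatebook’s actual schema. Instead of teaching one model’s serializer to drop fields, the list endpoint converts each full question into a smaller summary type whose shape is fixed and explicit.

```python
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class Question:
    """Full question returned when fetching a single question (hypothetical fields)."""
    id: str
    title: str
    resolution: Optional[str] = None
    notes: Optional[str] = None
    forecasts: List[float] = field(default_factory=list)


@dataclass
class QuestionSummary:
    """Slimmer, explicit shape used only for list responses."""
    id: str
    title: str
    resolution: Optional[str] = None

    @classmethod
    def from_question(cls, question: Question) -> "QuestionSummary":
        return cls(id=question.id, title=question.title, resolution=question.resolution)


def list_questions_response(questions: List[Question]) -> List[dict]:
    """Serialize each question as a summary; the full model is left untouched."""
    return [asdict(QuestionSummary.from_question(q)) for q in questions]


questions = [Question(id="q1", title="Ship v1 by Friday?", notes="Long notes", forecasts=[0.7])]
print(list_questions_response(questions))
# [{'id': 'q1', 'title': 'Ship v1 by Friday?', 'resolution': None}]
```

The trade-off is one more small type to maintain, in exchange for leaving the full model’s serialization behavior unchanged everywhere else it is used.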

Tooling

The above focused on the models, but I feel that the tools and workflow present even more low-hanging fruit right now[4]. In particular, the biggest issue is that Claude Code is an amazing tool for authoring, which leaves reviewing both much less enjoyable and a productivity bottleneck. I mentioned above that I did a mix of code reading in Zed and GitHub’s PR interface.

Of the two, Zed is definitely more ergonomic for reviewing and inspecting Claude’s code. GitHub’s PR interface just isn’t set up for reviewing the throughput of changes Claude Code can make, as it was designed for an era in which writing code took humans longer than reviewing it (on average). On top of that, iterating on PR comments with Claude remains clunky. Claude can either fetch comments using the gh CLI tool, which works but isn’t really designed to serve as a full-service code review API, or respond via the @claude feature in GitHub. The latter is GitHub-native but doesn’t have access to the relevant conversation history for a given feature, so it ends up being analogous to asking a coworker who just started to review every PR.

Going back to Zed, as an editor, it’s a more natural tool for carefully reading, navigating, and testing code. However, like all code editors, Zed is designed more for viewing codebases than for viewing changes to codebases. It has Git diff support, but diffs are not a first-class citizen in the way files are, meaning it can sometimes be hard to track what’s changed when reviewing Claude’s rapid-fire updates to a codebase. To be fair, Claude Code has editor integrations, which are intended to help with this, but Zed doesn’t have one yet, so I couldn’t try this.

With the current state of these tools, I have to apply a lot of discipline to review Claude’s code carefully and consistently, and even then I think I miss much more than I would if I were writing it myself. I feel like there’s a huge opportunity to improve the experience here, taking inspiration from other areas such as aviation, where high-stakes human review is the norm, and I’m hopeful more people work on this[5].

Conclusion

First off, I’m really happy I did this and feel that it was worth the time I put in. I now understand Claude Code’s strengths and weaknesses better, leaving me newly comfortable using Claude Code as part of my core development workflow. At least at first, I intend to experiment more with Claude Code for greenfield projects, prototypes, and clearly specified features. On the other hand, for complex systems where deep understanding and care matter more than velocity, I’ll likely continue to rely on AI-integrated IDEs like Cursor or Zed (with AI features turned on). Because these editors integrate chat/agent more closely with editing, they give me more tactile engagement with the codebase than the agentic-first workflow. This seems to help me maintain a fingertip feel for the code even though I’m delegating substantial work. Without this, the deficits I discussed would leave me worried about not understanding my codebase well enough to be an effective steward of it. Given how fast things in AI move, I expect this to change, so view this as a snapshot of my views as of August 2025 rather than an eternal judgement.

In terms of how this experience affects my expectations for AI coding progress, my thoughts are mixed. Relative to my experience using them for mostly ML/AI tasks, the models were able to do much more of the work for this project themselves. I believe the productivity gains for web development are meaningful using the current best tools. Yet I also feel like reflecting on my experience highlighted some of the subtle but important gaps between these systems and human developers (many of which Alex Telford touched on here). In particular, there’s a fuzzy but real type of agency that involves not just doing things as told but “stewarding” of a project/system/thing. I feel like the models have a ways to go before they can be trusted in this role. Speculating about AI research progress is even more fraught, but I suspect (but am not confident) that bridging these gaps will require different types of RL tasks and environments than those currently used for post-training the models at coding.

Reflecting on this post itself, while I tried to include enough examples to be useful as a case study rather than just a list of conclusions, reviewing the raw transcripts and comparing them to the post leaves me dissatisfied. There’s still a yawning gap between the nuance and richness of the transcripts and what I’ve been able to distill here. While I have some ideas for different types of writing and cases that might help, part of me thinks a better option is to follow Armin’s lead and stream myself working on something (personal). I’m not ready to commit to this now, but encouragement could certainly influence me.

If you made it to this point, thanks for reading. I’d love to hear others’ thoughts and experiences on this topic, especially if they disagree with or differ from my own.

[1] MCP (Model Context Protocol) servers provide a standardized protocol for AI models to interact with external tools and data sources.

[2] As always, all bets are off when it comes to what future models will or won’t be capable of.

[3] Based on a script Claude Code wrote that uses Claude 3.5 Haiku to classify messages.

[4] Although I recognize the counterpoint that the models are improving so fast that too much optimization just leaves you with an obsolete workflow.

[5] As part of their most recent raise, Zed is actively working towards a vision in which human and agent collaboration is a first-class citizen of their editor, so maybe they will be the ones to crack this!