In September 2022, I wrote a post describing a set of “omens” that, if observed, would indicate faster-than-expected AI progress. I framed many of these on a 0-5 year horizon, but AI obviously moves too fast to wait until 2027 to review them. Consider this post an arbitrarily timed intermediate check-in, triggered by my sense that many of my omens had come to pass.

The rest of this post assumes you’ve skimmed the omens post, so if you haven’t already, I recommend doing that now.

Overall takeaways and learnings

I’m putting these at the beginning because I suspect most people won’t actually read through my omen-by-omen breakdown. But to understand where I’m coming from, you may have to read through at least some of my detailed reflections.

1. Progress was faster than I expected

A lot of my omens came to pass and it’s only mid-2025. Given that the earliest timeframe was 2022-2027, it’s clear that progress exceeded my expectations. ChatGPT rocketed to 400M weekly active users when I thought 1M would constitute an omen. Anthropic reportedly is on track for $3B in annualized revenue. AI achieved IMO silver medal performance years ahead of schedule. Context windows exploded from 4K to 10M+ tokens. Research milestones I placed in the 5-15 year bucket are already falling.

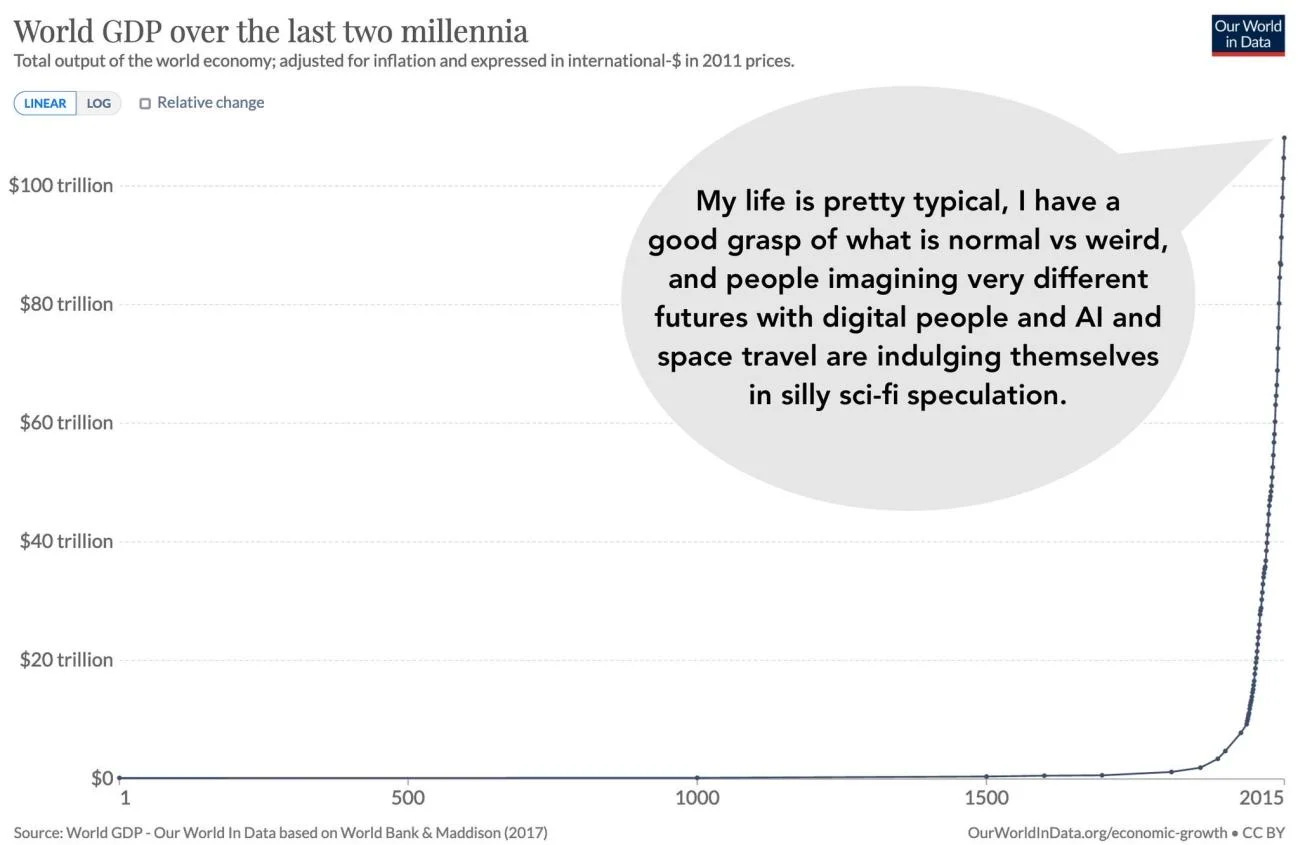

I’ll say more about the jagged frontier shortly, but I don’t want to bury the lede: it seems like we’re on track for transformative AI, with the software revolution happening faster than I expected. I don’t claim to know what the impact is going to be, but this image increasingly resonates.

I suppose this also means it’s time to start thinking about new omens…

2. Outside of software & SaaS, deployment lags behind what I would have expected if you’d only told me about the research progress

On one hand, many of the milestones I laid out for “open-ended reasoning” and “formidable intelligence” have already been hit. We have AI systems that can perform better than the median human on math, coding, and lots of other benchmarks. These systems can remain coherent in conversations for minutes to hours.

If, in 2022, you’d told me we would have systems capable of these feats, I would’ve guessed that these same systems would be much more capable of taking on a randomly sampled white collar task with the right scaffolding.

But so far the economic impact of AI has been muted. Yes, we have products with historically unprecedented adoption speed, but sector-level impacts have so far been limited to coding, graphic design, and maybe one or two other areas. We have AI that can ace medical licensing exams, but radiologists aren’t being replaced. We have superhuman Go players but not superhuman burger-flippers. The chasm between “AI can do X in controlled settings” and “AI is turning X capabilities into deployed products” remains large.

In hindsight, I think I missed at least two key things:

Agency is its own capability and it needs to be trained for. While it’s possible pre-training produces agency at sufficient scale, at today’s scales, big unlocks for agency/coherence seem to come from RL on tasks that require agentic reasoning.

The real world just has a lot of friction. For example, Waymo, one of the most incredible, delightful technological breakthroughs in my lifetime, works miraculously but continues to roll out relatively slowly. Similarly, while robotics progress seems fast, deployment so far looks more like self-driving than AI chatbots in terms of speed and level of difficulty.

Based on this, my current best guess is that coherent agentic systems with human-level adaptability, especially paired with RL, will accelerate economic impact for white collar work. On the meta level, I have also become more humble about my ability to anticipate bottlenecks.

3. Regulatory and sociological barriers are even more important than I realized

Throughout my omen reviews, a pattern emerged: the gap between “technically possible” and “actually deployed” is often explained by human factors rather than technical ones. Self-driving cars are arguably safer than human drivers but face regulatory hurdles and public skepticism. Radiologists could potentially be augmented or replaced but aren’t due to liability concerns and institutional inertia. I knew these factors mattered in 2022, but I underestimated just how much they’d determine deployment timelines.

4. I’m better at some types of predictions than others

Looking back, my most accurate predictions involved pure capability benchmarks (math competitions, context length) or consumer software adoption. My least accurate involved physical world deployment or anything requiring organizational change.

5. Capturing my holistic feeling about progress is hard

Even having recorded and now reviewed my omens, it’s hard for me to really feel the difference between my expectations in 2022 and now. It’s clear that I am more “AGI-pilled” now than I was then, but so much of my internal thinking from that time has faded. The exercise of making concrete predictions helps, but there’s still something ineffable about how my views have shifted that resists quantification. This makes me think that going forward I should complement quantitative predictions with more qualitative belief snapshots: not just “what will happen” but “how do I feel about what’s happening.”

With that, let me break down how each category of omens performed to illustrate these takeaways in detail…

Section-by-section review

The three categories of omens showed very different patterns:

Rapid Economic Growth: Mixed bag. Pure software products crushed it. ChatGPT reached ~300 million weekly active users as of December 2024. GitHub Copilot had 1.3M subscribers as of February 2024. And OpenAI says 92% of the Fortune 500 are experimenting with its models. Yet the physical-world rollout still drags. As of May 2025, Waymo is at roughly 250,000 paid trips per week, despite reporting 81% fewer injury-causing crashes than a matched human benchmark.

Demonstrations of Formidable Intelligence: Surprisingly strong showing. IMO silver medal achieved, AI programmers starting to automate junior tasks, research assistants (Elicit, Consensus, Deep Research tools, Future House’s Platform) genuinely useful beyond search. But no Riemann Hypothesis proof or paradigm-shifting discoveries yet.

Open-ended Reasoning: Context windows exploded (4K→1M+ tokens), dialogue coherence solved, but continual learning remains behind despite the economic incentives.

Omen-by-omen review

Rapid economic growth

Self-driving car deployment continues apace and even accelerates, starting to replace a meaningful (10%) fraction of the ride share market.

For the purpose of evaluating this one, I’m just going to focus on Uber to simplify things. But I recognize that to grade it properly, I’d need to look at global rideshare mileage compared to global self-driving deployment.

One month ago, Waymo announced that they’re making 50,000 trips per week across three cities. In 2023, Uber logged an average of 26 million trips per day (source), or roughly 182 million per week, about 3,600x Waymo’s weekly volume. That means for Waymo alone to reach 10% of Uber’s trip volume, it would need to grow roughly 360x in the next 3.5 years. Revenue tells a far more optimistic story. It’s hard to find exact numbers, but my best guess is that Waymo earned around $1B in revenue this past year compared to Uber’s $37B, meaning they’d need to 3.7x their revenue to reach 10% of Uber’s current revenue. I dug into this a bunch more, but the details are probably not super interesting, so I’ve put them in a footnote [1].
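A back-of-the-envelope sketch of the arithmetic above, in Python. It uses only the rough figures quoted in this section (26M Uber trips per day, 50,000 Waymo trips per week, and the $1B vs. $37B revenue guesses), converts both trip counts to a per-week basis before dividing, and treats the 10% threshold as a parameter. The constant and function names are illustrative, not from any data source.

```python
# Back-of-the-envelope check of the Waymo vs. Uber multiples.
# Inputs are the rough figures quoted in the text, not authoritative data.

UBER_TRIPS_PER_DAY = 26_000_000    # Uber's 2023 daily average, as quoted
WAYMO_TRIPS_PER_WEEK = 50_000      # Waymo's announced weekly trips, as quoted
UBER_REVENUE = 37e9                # Uber's annual revenue, as quoted
WAYMO_REVENUE_GUESS = 1e9          # rough guess for Waymo's annual revenue
TARGET_SHARE = 0.10                # the omen's 10% threshold


def growth_needed(current: float, incumbent: float, share: float = TARGET_SHARE) -> float:
    """How many times `current` must grow to equal `share` of `incumbent`."""
    return share * incumbent / current


# Put both trip counts on the same per-week basis before comparing them.
uber_trips_per_week = UBER_TRIPS_PER_DAY * 7
volume_ratio = uber_trips_per_week / WAYMO_TRIPS_PER_WEEK
trip_multiple = growth_needed(WAYMO_TRIPS_PER_WEEK, uber_trips_per_week)
revenue_multiple = growth_needed(WAYMO_REVENUE_GUESS, UBER_REVENUE)

# Revenue per trip implied if the revenue guess and the trip count were both right.
implied_revenue_per_trip = WAYMO_REVENUE_GUESS / (WAYMO_TRIPS_PER_WEEK * 52)

print(f"Uber/Waymo weekly trip ratio: {volume_ratio:,.0f}x")
print(f"Growth needed (trips):   {trip_multiple:,.0f}x")
print(f"Growth needed (revenue): {revenue_multiple:.1f}x")
print(f"Implied Waymo revenue per trip: ${implied_revenue_per_trip:,.0f}")
```

With these inputs the trip-based multiple comes out to 364x and the revenue-based one to 3.7x. The last line is one way to probe that mismatch: a $1B revenue guess spread over roughly 2.6M trips a year implies about $385 per ride, which looks high for a ride-hail trip and hints (without proving) that the revenue estimate is the shakier number.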

Stepping back, the main takeaway for me here is that it’s important to factor in sociological and regulatory factors when thinking about these omens. Looking at the data and news, self-driving deployment continues to be rate-limited more by sociological factors, such as people being inherently wary of self-driving cars and getting extremely upset when the cars do things like block roads, than by actual safety. On that front, I’m reasonably confident that Waymo is close to, if not already well past, median human safety, and I expect that gap to widen substantially before 2027.

At least one LLM-driven product such as Copilot has 1 million unique active users. One benchmark here is Figma, which according to this article from June 2022 has 4 million unique users. There’s also a subtlety here that’s a little hard to deal with, which is that AI tools like DALL-E 2 get a ton of sign-ups but then experience rapid user drop-off. I’m just going to have to use my judgment here since I’m looking for widespread and persistent usage. I’d buy even faster acceleration if white collar AI tools routinely start getting used to make spreadsheets, PowerPoints, etc. for businesses, but I suspect this is unlikely even in a relatively short timelines world due to structural factors.

In contrast to the self-driving car one, I obviously undershot here. Around 6 months ago, Sam Altman said that ChatGPT had 100 million weekly active users, and recent reports suggest they’re now at 400 million. That alone would mean this omen has already come to pass, but ChatGPT is by no means the only product to have crossed this threshold. GitHub Copilot had 1.3 million paying customers as of February, Perplexity allegedly has 10 million monthly active users with rapid growth, and Gemini either has already crossed or soon will cross the 1 million active users threshold.

And while acceleration may be slightly slower on the enterprise front, it’s still rapid relative to the expectation I set. Anecdotally, many of my friends and acquaintances use at least some enterprise features of Copilot, Cursor, ChatGPT, Claude, or Gemini and I expect that number to increase as the “enterprise context problem” gets solved.

So consumer and enterprise adoption of AI tools has triggered my omen. Personally, I expect this growth to continue, but even if the adoption curve were to plateau as some are claiming, we’d already be well beyond the level of adoption I originally stated would constitute an omen of acceleration.

As will become a theme, the main takeaway here seems to be that pure software adoption has far fewer barriers to explosion than anything that interacts with the physical world and/or regulation.

AI manufacturing tools from companies like Covariant start achieving real market penetration.

Hard for me to grade this one. Investment in robotics is clearly skyrocketing, with multiple well-funded humanoid robotics companies (Figure, 1X, Kyle Vogt and co.’s stealth company) entering the scene, as well as competitors to Covariant. Yet, as far as I know, “real market penetration” has not yet been achieved. Despite that, I’m still more optimistic than I was at the time of writing. Scaling clearly works for robotics, so the outstanding Qs for me are:

How hard is it to go from one nine of reliability to five (or however many nines you need) to replace a human?

How much does (waves hands around) “physical world messiness” slow down rapid deployment?

Does China leapfrog here because their manufacturing base is more dynamic and further along the (mostly AI-free) automation adoption curve?

How much does software recursive self-improvement translate to better AI for hardware applications?
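The “nines” question can be made concrete with a few lines of Python: each additional nine of per-task reliability cuts the failure rate, and so the expected number of failures over a fixed workload, by 10x. The 1,000-tasks-per-day workload below is a hypothetical number chosen for illustration, and giving every task the same failure rate is a simplifying assumption.

```python
# Illustrative only: how "nines" of per-task reliability translate into failures.
# TASKS_PER_DAY is a hypothetical workload, not a figure from this post.

TASKS_PER_DAY = 1_000


def failure_rate(nines: int) -> float:
    """Per-task failure rate for `nines` nines of success (e.g. 3 -> 99.9%)."""
    return 10.0 ** -nines


def expected_failures(nines: int, tasks: int = TASKS_PER_DAY) -> float:
    """Expected failed tasks out of `tasks` attempts at a constant failure rate."""
    return failure_rate(nines) * tasks


for n in range(1, 6):
    success = 1 - failure_rate(n)
    print(f"{n} nine(s): {success:.3%} success, "
          f"~{expected_failures(n):g} failures per {TASKS_PER_DAY:,} tasks")
```

Going from one nine to five takes the expected failures from about 100 per 1,000 tasks to about 0.01, a 10,000x reduction, which is the size of the gap the question above is asking about.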

Radiologists and pathologists at least become more productive as a result of AI tooling. I controversially do think they could probably be replaced in the medium term, if not the short term, but I highly, highly doubt they will be, for regulatory capture + extreme precautionary principle reasons.

Depending on who I ask, radiology and pathology AI tools are “basically there” or “still making obvious mistakes”. From a cursory look at various reports and stats, I came away feeling like adoption is growing, but true replacement or otherwise disruptive usage isn’t really happening. This is based on the fact that supposedly around 30% of radiologists use some sort of AI tool in their work, but most seem to be doing so in a cursory way. On top of that, the number of radiologists practicing is, if anything, growing too slowly to meet demand.

On the flip side, research progress feels rapid, with multimodal models like Med-Gemini and AMIE continuing to make Pareto improvements on medical tasks, including radiology and pathology related evals. But to be truly transformative, these tools need to be integrated into actual clinical practice, which itself will involve multi-year software projects.

Together, this reinforces my takeaway that interaction with the physical world, legacy systems, and regulatory barriers all create overhangs that slow the translation of sufficient capabilities to real world disruptive deployment.

AI systems start seeing slow but real adoption in food industry tasks like making burgers and taking orders. Or for another example, automated coffee machines like Cafe X (which I recently used on a layover in SF) spread to more airports or similar settings.

I framed this as focused on last mile tasks such as cooking and serving. My sense is not much has changed here since I wrote this, although the investment in humanoid robotics presumably is partly targeted at these types of tasks.

One thing I underrated at the time was innovation upstream in the supply chain. Although it’s not as sexy as LLMs and AGI, it seems like more prosaic ML systems are slowly but surely making their way into multiple stages of the food supply chain. Be it more accurate nowcasting, drones for data collection, or automated tractors, my sense is that ML is a key piece in ensuring food production continues to become more efficient.

My main takeaway here is that it’s a bit early for the last mile food production sorts of tasks and I’d be even more surprised if we see substantial replacement by 2027 even with rapid research progress. A secondary takeaway is that for specialty areas like this, it’s hard for me to even evaluate omens unless they’re framed more specifically or the progress is extremely obvious even to a casual observer.

LLM companies’ products achieving promising uptake.

See above, clearly happening.

Signs that robotics systems that help humans are on the cusp of being useful. E.g., Google or Everyday Robots demonstrates an AI janitor that actually operates autonomously in their or another office.

Mostly covered above: this isn’t happening yet, although 1x.tech and the Kyle Vogt and co. stealth startup I mentioned are working on home robotics. This is one of the places I’m most uncertain. On one hand, the demand for home robotics is so high. On the other, getting things to be general and safe enough to be trusted seems hard! If I’m using a home robot besides a vacuum or another bespoke device by end of 2027, I’ll be surprised. By 2030, I think it’s much more likely (maybe a 50% chance).

Demonstrations of formidable intelligence

Seems plausible but unlikely given recent Math Arena results. It also depends on the resolution criteria. AlphaProof plus AlphaGeometry already performed at silver medal level in the last IMO, but those are specialized models. That said, I expect by 2027, reasoning models will definitely be performing at IMO Gold level. Again, stepping back, I think math is going to be an area where we’ll get other omens of acceleration that I didn’t predict, for a few reasons:

It lacks any of the physical world barriers of other sciences like biology.

Synthetic data generation & verifiable RL is much easier using things like proof assistants.

We’ve seen crazy rapid progress on math benchmarks over the past few years.

AI proves an important, broad theorem that humans have been unable to prove up until now

Examples: Riemann Hypothesis

Hasn’t happened yet, but by 2027, I think this is more likely than I thought it was at the time of writing. I am overall more sympathetic to Christian’s perspective here than Francois’s.

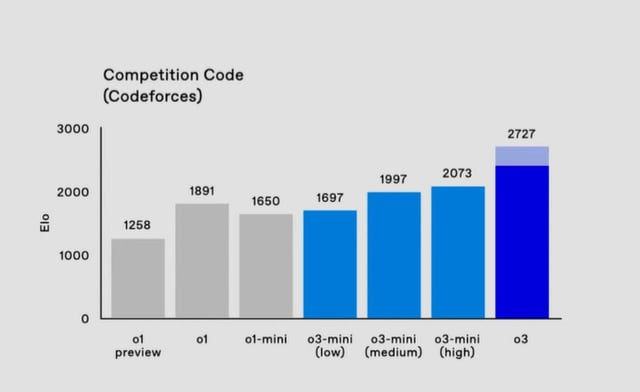

AI programming model outperforms best competitive programmers (Manifold questions: 1).

Unless something wild happens to stop progress, we’re definitely gonna be there by 2027. o3 is already at something like 99th+ percentile performance on Codeforces, and my sense is there’s still at least one more OOM to go of just scaling up the same verifiable RL approaches.

AI programmers start replacing junior programmers in industry. Example: Can I use an AI system to take a first pass at designing a web app that I’ve described in text/maybe mock-ups?

It’s hard to tell how much this is already happening, but I am pretty confident it’ll be happening by 2027. Today, we’re already seeing an incremental shift toward companies, especially smaller ones, hiring fewer junior engineers. But it’s hard to tell whether that has much to do with AI or is just the end of ZIRP.

Looking at the example I gave back then, I’ve realized that replacement is more complicated than just looking at individual capabilities. Replacing someone requires either reorganizing surrounding systems and processes or replacing the entire bundle of tasks they do. In the case of a software engineer, this means you don’t just need to replace writing code, but also debugging issues that come up, responding to users you support, metarational bridging between requirements and implementation, and much more.

AI programming system can automatically and reliably perform large scale refactorings. Examples include migrating a codebase from one framework to another, “remove huggingface from this modeling script and replace it with pytorch”, and other similar tasks.

I think tools like Claude Code, Devin, Codex, etc. aren’t that far from this, but they’re not there yet either. Concretely, I wouldn’t trust them to autonomously do a large scale refactor in a legacy codebase, especially not without strong human guardrails (human-written tests and manual checks). Related to that, I have been surprised how little research and evals have focused on this. It seems like an especially tractable task because you can lean heavily on golden master style tests to confirm correctness.
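For readers unfamiliar with golden-master (also called characterization or snapshot) testing: you record the existing code’s outputs on a fixed, reproducible set of inputs before refactoring, then require the refactored code to reproduce those recorded outputs exactly. A minimal Python sketch follows; `legacy_tokenize`, `refactored_tokenize`, and the JSON golden file are hypothetical stand-ins, not part of any particular tool or codebase.

```python
import json
import random
from pathlib import Path


def legacy_tokenize(text: str) -> list[str]:
    """Hypothetical pre-refactor code whose behavior we want to preserve."""
    return [token.lower() for token in text.split()]


def refactored_tokenize(text: str) -> list[str]:
    """Hypothetical rewrite under test."""
    return text.lower().split()


def make_inputs(n: int = 200, seed: int = 0) -> list[str]:
    """Deterministic pseudo-random inputs, so the golden file is reproducible."""
    rng = random.Random(seed)
    words = ["Alpha", "beta", "GAMMA", "delta", "", "  "]
    return [" ".join(rng.choice(words) for _ in range(rng.randint(0, 8))) for _ in range(n)]


def record_golden(path: Path) -> None:
    """Run once, before refactoring: capture legacy outputs as the golden master."""
    golden = [[text, legacy_tokenize(text)] for text in make_inputs()]
    path.write_text(json.dumps(golden))


def find_regressions(path: Path) -> list[str]:
    """Return every recorded input whose refactored output differs from the golden one."""
    golden = json.loads(path.read_text())
    return [text for text, expected in golden if refactored_tokenize(text) != expected]


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        golden_path = Path(tmp) / "golden.json"
        record_golden(golden_path)
        regressions = find_regressions(golden_path)
        print(f"{len(regressions)} regressions out of {len(make_inputs())} inputs")
```

In a real migration, the inputs would more likely come from logged traffic or existing fixtures than from a toy generator, and outputs may need normalizing (for example, stripping timestamps) before an exact match is meaningful.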

AI research assistants helpful enough for analyzing papers that I actually trust their responses to questions vs. just using them as glorified search.

Yep. Elicit now has 2M+ users and both it and other tools like all the Deep Research variants and Future House’s platform enable automated literature reviews that go well beyond search. I use these tools regularly and trust them for initial research passes. I was way too pessimistic here - I originally gave this <10% probability!

There is one caveat, which is that I do not yet trust these tools’ conclusions without substantial spot checking. Especially with Deep Research, I’ve found that it is often able to write dangerously plausible sounding things that do not hold up when I dig into the details of the cited evidence. This feels like an example of the “taste/skepticism gap” that exists across a few domains.

AI systems beat humans at forecasting medium to long term events.

Despite some interesting research, I don’t know if we’re there yet, but we’re close. With the right scaffolding, I wouldn’t be surprised to see Claude Opus 4, o3, or Gemini 2.5 Pro perform at the top of a forecasting competition. For that reason, I expect this to fall soon, certainly by end of 2027.

AI engineering systems design new materials or artifacts that unblock a key bottleneck in some engineering process. Examples:

Nanosystem that has a previously unseen capability designed primarily by an AI system.

New material better than a material that’s had a lot of human effort put into improving it such as carbon fiber and is actually usable.

Models continue to knock down biological prediction tasks. Examples:

Protein complex structure prediction for large protein assemblies vs. small sets of monomers. As a close to home example, if a model could predict the entire structure of the AAV capsid, that would be very impressive.

In a similar vein, if structure models become able to predict variant effects accurately, that would constitute greater than expected progress.

Accurately predicting time series dynamics vs. static structures.

Improved receptor binding prediction, with bonus points if it’s clearly getting used to design drugs more quickly/effectively.

Interestingly, I’d say I was relatively calibrated here. While AlphaFold 3 is incredibly impressive, it cannot predict full viral complex structures. By 2027, I do expect that much of binding becomes fully predictable with models, especially in commercially valuable areas. I’m less sure on how quickly dynamics modeling will progress, but I expect it to get a lot better between now and end of 2027.

Various forms of progress towards the world described in A Future History of Biomedical Progress in the next 5 years. Examples:

Models that learn from scientific papers and writing actually doing something useful. (I will put myself out there for this one and say that I think this is very unlikely, <10%.)

Models drive automated robotic laboratories that both run experiments and automatically decide which experiments to perform next.

I’ve definitely become more optimistic about models that learn from the literature and design experiments. Work like PaperQA 2 and all the Deep Research tools shows that models can understand literature better than I expected, and improvements in vision models are a big unlock for literature understanding, especially in biology. On the experimental design front, we are seeing initial examples (1, 2, 3) of working systems, and the number of commercial players is growing rapidly.

Progress towards open-ended reasoning

The final category of omens focused on longer time horizon reasoning and continual learning. Here the results were more mixed:

RL agents beat humans at hard exploration games like Montezuma’s Revenge

This happened but it didn’t matter that much. Multiple algorithms (MuZero, Agent57) achieved superhuman scores with 2M+ vs the previous human record of ~11K.

Today, the more relevant question is how quickly agents will progress on real-time adaptation and learning from experience, partly measured by performance on games they weren’t trained on, like Pokemon. The good news is more and more benchmarks are coming out to track this, so we should be able to observe progress in real time.

Large language model context lengths allowing discussion of entire books

We may not be 100% there, but by end of 2027, we surely will be. We went from ~4K tokens to Claude’s 200K and Gemini’s 1-10M tokens. Some experimental models allegedly even handle 100M+ tokens.

Dialogue systems maintaining coherence >5 minutes

With current context sizes, this clearly happened. I can easily have a five-minute conversation with pretty much any frontier LLM and be confident it’ll track what I said at the beginning. This feels so obvious now it’s hard to remember when it was questionable.

Robotics systems learning in real-time from mistakes

I’m not sure about this one. I’m just too much of an outsider to say.

[1] Astute readers will notice there’s a big gap here between the trip-volume and revenue multiples. I don’t know how to explain this, and it makes me think one of my numbers is off by at least an order of magnitude… The former estimate makes the omen seem unlikely to come to pass in the next 3.5 years, whereas the latter makes it seem quite possible. This makes evaluating plausibility hard, so I’m going to cheat and just trust the estimated number of trips over revenue.

Evaluating plausibility on that basis, Waymo reaching 10% of Uber’s trip volume by September 2027 seems possible but unlikely to me, given how their rollout has proceeded to date. Just expanding to the top 10-20 major US cities presumably gets them around 5x growth, but regulatory headwinds combined with their extremely careful approach make me think they’re more likely to continue at only a slightly faster pace. For example, in 2024, they’re seemingly only expanding to Austin and the rest of LA.

I’m not factoring in other self-driving players here, since Waymo seems to be far and away the furthest ahead, but Tesla is a different story. In 2023, Tesla produced and delivered almost 2M vehicles, and a month and a half ago, Tesla FSD hit 1B miles driven. As part of that, Tesla claimed half a billion dollars in revenue from FSD in 2023. Uber’s revenue in 2023 was $37B, so what really matters for the purposes of the omen is growth, and at least today, growth of FSD sales seems to be slowing down.

That said, I’m definitely not counting Tesla out here. At current subscription costs of $100/month, Tesla would need ~3M subscribers to hit 10% of Uber’s current revenue. According to this reddit thread, there are around 2M Teslas on the road today, so even if FSD rolled out to every single Tesla on the road, there’d still be a 1.5x gap to close. And realistically, not every Tesla owner will pay for FSD. On the other hand, I will never fully count out Elon, and if Tesla can reignite growth even a bit beyond Uber’s, hitting 10% of the market in terms of revenue (and mileage) before 2027 seems quite possible.
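The subscriber arithmetic above can be reproduced in a few lines of Python. This sketch assumes, as the paragraph does, that all FSD revenue comes from $100/month subscriptions and that roughly 2M Teslas are on the road; the variable names are illustrative.

```python
# Rough check of the Tesla FSD subscriber arithmetic.
# Assumes all FSD revenue comes from monthly subscriptions (figures as quoted).

UBER_ANNUAL_REVENUE = 37e9      # Uber's 2023 revenue
TARGET_SHARE = 0.10             # the omen's 10% threshold
FSD_PRICE_PER_MONTH = 100       # FSD subscription price in dollars
TESLAS_ON_ROAD = 2_000_000      # rough estimate from the cited thread

target_revenue = TARGET_SHARE * UBER_ANNUAL_REVENUE    # about $3.7B per year
revenue_per_subscriber = FSD_PRICE_PER_MONTH * 12      # $1,200 per year
subscribers_needed = target_revenue / revenue_per_subscriber

# Shortfall even if every Tesla on the road subscribed.
gap_at_full_uptake = subscribers_needed / TESLAS_ON_ROAD

print(f"Subscribers needed: {subscribers_needed / 1e6:.2f}M")
print(f"Gap at 100% uptake: {gap_at_full_uptake:.2f}x")
```

This prints about 3.08M subscribers and a 1.54x gap. Any uptake below 100% widens the gap proportionally: at a hypothetical 50% uptake, the fleet would need to be about 3x its current size.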